At Mozilla we’re running performance tests against Firefox OS devices several times a day, and you can see these results on our dashboard. Unfortunately it takes a while to run these tests, which means we’re not able to run them against each and every push, and therefore when a regression is detected we can have a tough time determining the cause.

We do of course have several different types of performance testing, but for the purposes of this post I’m going to focus on the cold launch of applications measured by b2gperf. This particular test launches 15 of the packaged applications (each one is launched 30 times) and measures how long it takes. Note that this is how long it takes to launch the app, and not how long it takes for the app to be ready to use.

In order to assist with tracking down performance regressions I have written a tool to discover any Firefox OS builds generated after the last known good revision and before the first known bad revision, and trigger additional tests to fill in the gaps. The results are sent via e-mail for the recipient to review and either revise the regression range or (hopefully) identify the commit that caused the regression.

Before I talk about how to use the tool, there’s a rather important prerequisite to using it. As our continuous integration solution involves Jenkins, you will need to have access to an instance with at least one job configured specifically for this purpose.

The simplest approach is to use our Jenkins instance, which requires Mozilla-VPN access and access to our tinderbox builds. If you have these you can use the instance running at http://selenium.qa.mtv2.mozilla.com:8080 and the b2g.hamachi.perf job.

Even if you have access to our Jenkins instance and the device builds, you may still want to set up a local instance. This will allow you to run the tests without tying up the devices we have dedicated to running these tests, and you won’t be contending for resources. If you’re going to set up a local instance you will of course need at least one Firefox OS device and access to tinderbox builds for the device.

You can download the latest long-term support release (recommended) of Jenkins from here. Once you have that, run java -jar jenkins.war to start it up. You’ll be able to see the dashboard at http://localhost:8080 where you can create a new job. The job must accept the following parameters, which are sent by the command line tool when it triggers jobs.

- BUILD_REVISION – The revision of the build that will be tested.
- BUILD_TIMESTAMP – A formatted timestamp of the selected build, for inclusion in the e-mail notification.
- BUILD_LOCATION – The URL of the build to download.
- APPS – A comma-separated list of the names of the applications to test.
- NOTIFICATION_ADDRESS – The e-mail address to send the results to.
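To make the parameter hand-off concrete, here is a minimal sketch of how a client could trigger a parameterised job through Jenkins' `buildWithParameters` remote API. It is not taken from b2ghaystack itself; the `build_trigger_request` helper and all parameter values are placeholders for illustration, and a real instance may additionally require authentication or a CSRF crumb.

```python
from urllib.parse import quote, urlencode
from urllib.request import Request


def build_trigger_request(jenkins_url, job_name, params):
    """Return a POST request for Jenkins' buildWithParameters endpoint."""
    url = "{}/job/{}/buildWithParameters".format(
        jenkins_url.rstrip("/"), quote(job_name))
    # The job parameters travel in the form-encoded request body.
    return Request(url, data=urlencode(params).encode("utf-8"), method="POST")


# Placeholder values: substitute the details of a real build.
params = {
    "BUILD_REVISION": "<revision>",
    "BUILD_TIMESTAMP": "<formatted timestamp>",
    "BUILD_LOCATION": "<build URL>",
    "APPS": "Settings",
    "NOTIFICATION_ADDRESS": "<e-mail address>",
}
request = build_trigger_request(
    "http://localhost:8080", "b2g.hamachi.perf", params)
print(request.get_method(), request.full_url)
```

Passing the request to `urllib.request.urlopen` would actually queue the build, so the sketch stops short of that and only prints what would be sent.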

Your job can then use these parameters to run the desired tests. There are a few things I’d recommend, which we’re using for our instance. If you have access to our instance it may also make sense to use the b2g.hamachi.perf job as a template for yours:

- Install the Workspace Cleanup plugin, and wipe out the workspace before your build starts. This will ensure that no artifacts left over from a previous build will affect your results.

- Use the Build Timeout plugin with a reasonable timeout, so that a failed device flash can’t stall the job indefinitely.

- It’s likely that the job will download the build referenced in $BUILD_LOCATION, so you’ll need to make sure you include a valid username and password. You can inject passwords to the build as environment variables to prevent them from being exposed.

- The build files often include a version number, which you won’t want to hard-code as it will change every six weeks. The following shell code uses wget to download the file using a wildcard:

      wget -r -l1 -nd -np -A.en-US.android-arm.tar.gz --user=$USERNAME --password=$PASSWORD $BUILD_LOCATION
      mv b2g-*.en-US.android-arm.tar.gz b2g.en-US.android-arm.tar.gz

- Depending on the tests you’ll be running, you’ll most likely want to split the $APPS variable and run your main command against each entry. The following shell script shows how we’re doing this for running b2gperf:

      while [ "$APPS" ]; do
        APP=${APPS%%,*}
        b2gperf --delay=10 --sources=sources.xml --reset "$APP"
        [ "$APPS" = "$APP" ] && APPS='' || APPS="${APPS#*,}"
      done

- With the Email-ext plugin, you can customise the content and triggers for the e-mail notifications. For our instance I have set it to always trigger, and to attach the console log. For the content, I have included the various parameters as well as used the following token to extract the b2gperf results:

      ${BUILD_LOG_REGEX, regex=".* Results for (.*)", maxMatches=0, showTruncatedLines=false, substText="$1"}

Once you have a suitable Jenkins instance and job available, you can move on to triggering your tests. The quickest way to install the b2ghaystack tool is to run the following in a terminal:

pip install git+git://github.com/davehunt/b2ghaystack.git#egg=b2ghaystack

Note that this requires you to have Python and Git installed. I would also recommend using virtual environments to avoid polluting your global site-packages.

Once installed, you can get the full usage by running b2ghaystack --help, but I’ll cover most of the options by walking through a real regression identified on our dashboard and narrowing it down using the tool. It’s worth calling out the --dry-run argument though, which will allow you to run the tool without actually triggering any tests.

The tool takes a regression range and determines all of the pushes that took place within the range. It will then look at the tinderbox builds available and try to match them up with the revisions in the pushes. For each of these builds it will trigger a Jenkins job, passing the variables mentioned above (revision, timestamp, location, apps, e-mail address). The tool itself does not attempt to analyse the results, and neither does the Jenkins job. By passing an e-mail address to notify, we can send an e-mail for each build with the test results. It is then up to the recipient to review and act on them. Ultimately we may submit these results to our dashboard, where they can fill in the gaps between the existing results.
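The matching step described above can be sketched roughly as follows. This is an illustration rather than the tool's actual code: the shape of the `pushes` dictionary is an assumption based on the pushlog's json-pushes output, the build records are a hypothetical structure, and the all-zero revision is a placeholder for a build outside the range. The two real revisions come from the example console output later in this post, where the number shown in parentheses is assumed to be a build timestamp.

```python
def revisions_from_pushes(pushes):
    """Collect short (12-character) changeset hashes from a json-pushes style dict."""
    revisions = set()
    for push in pushes.values():
        for changeset in push["changesets"]:
            revisions.add(changeset[:12])
    return revisions


def match_builds(builds, revisions):
    """Keep builds whose revision appears in the pushes, oldest first."""
    matched = [b for b in builds if b["revision"][:12] in revisions]
    return sorted(matched, key=lambda b: b["timestamp"])


# Assumed structures; push ids and the all-zero revision are placeholders.
pushes = {
    "1": {"changesets": ["f98c5c2d6bba"]},
    "2": {"changesets": ["bd4f1281c3b7"]},
}
builds = [
    {"revision": "bd4f1281c3b7", "timestamp": 1392119588},
    {"revision": "000000000000", "timestamp": 1392100000},
    {"revision": "f98c5c2d6bba", "timestamp": 1392094613},
]

matched = match_builds(builds, revisions_from_pushes(pushes))
for build in matched:
    print(build["revision"], build["timestamp"])
```

Comparing on a 12-character prefix is a design choice made for this sketch, so that short and full hashes can be matched against each other.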

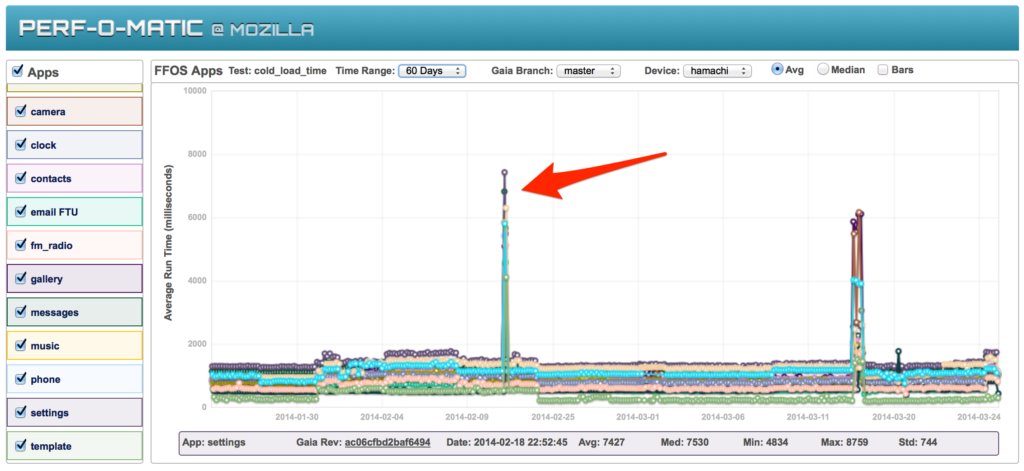

The regression I’m going to use in my example was from February, where we actually had an issue preventing the tests from running for a week. When the issue was resolved, the regression presented itself. This is an unusual situation, but serves as a good example given the very wide regression range.

Below you can see a screenshot of this regression on our B2G dashboard. The regression is also available to see on our generic dashboard.

It is necessary to determine the last known good and first known bad Gecko revisions in order to trigger tests for builds in between these two points. At present, the dashboard only shows the Git revisions for our builds, but we need to know the Mercurial equivalents (see bug 979826). Both revisions are present in the sources.xml available alongside the builds, and I’ve been using this to translate them.

For our regression, the last known good revision was 07739c5c874f from February 10th, and the first known bad was 318c0d6e24c5 from February 17th. I first ran this against the mozilla-central branch:

b2ghaystack -b mozilla-central --eng -a Settings -u username -p password -j http://localhost:8080 -e dhunt@mozilla.com hamachi b2g.hamachi.perf 07739c5c874f 318c0d6e24c5

- -b mozilla-central specifies the target branch to discover tinderbox builds for.
- --eng means the builds selected will have the necessary tools to run my tests.
- -a Settings limits my test to just the Settings app, as it’s one of the affected apps, and means my jobs will finish much sooner.
- -u username and -p password are my credentials for accessing the device builds.
- -j http://localhost:8080 is the location of my Jenkins instance.
- -e dhunt@mozilla.com is where I want the results to be sent.
- hamachi is the device I’m testing against.
- b2g.hamachi.perf is the name of the job I’ve set up in Jenkins.
- Finally, the last two arguments are the good and bad revisions as determined previously.
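If you expect to repeat the same invocation against several branches, it can help to assemble the command programmatically. The sketch below only uses the options described above plus --dry-run; the `haystack_command` helper is a hypothetical convenience and not part of b2ghaystack, and the credentials are the same placeholders used in the command above.

```python
import shlex


def haystack_command(branch, good, bad, dry_run=False):
    """Assemble a b2ghaystack command line for one branch and revision range."""
    command = [
        "b2ghaystack", "-b", branch, "--eng", "-a", "Settings",
        "-u", "username", "-p", "password",
        "-j", "http://localhost:8080", "-e", "dhunt@mozilla.com",
    ]
    if dry_run:
        # Report what would be triggered without starting any Jenkins jobs.
        command.append("--dry-run")
    return command + ["hamachi", "b2g.hamachi.perf", good, bad]


for branch in ("mozilla-central", "mozilla-inbound", "b2g-inbound"):
    command = haystack_command(
        branch, "07739c5c874f", "318c0d6e24c5", dry_run=True)
    print(shlex.join(command))
```

The resulting list could be handed to `subprocess.run` to execute it; printing it first makes it easy to check before anything is triggered.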

This discovered 41 builds, but to prevent overloading Jenkins the tool only triggers a maximum of 10 builds (this can be overridden using the -m command line option). The ten builds are interspersed among the 41, and covered the range f98c5c2d6bba:4f9f58d41eac.
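One way to pick interspersed builds is to take evenly spaced positions across the ordered list while always keeping the first and last entries. The following is a sketch of that idea under my own assumptions, not necessarily the exact selection the tool performs; `select_interspersed` is a name made up for this example.

```python
def select_interspersed(items, maximum=10):
    """Pick at most `maximum` items spread evenly, keeping both ends."""
    if maximum < 1:
        raise ValueError("maximum must be at least 1")
    if len(items) <= maximum:
        return list(items)
    if maximum == 1:
        return [items[0]]
    # Evenly spaced positions between the first and last index.
    step = (len(items) - 1) / (maximum - 1)
    return [items[round(i * step)] for i in range(maximum)]


# Integers stand in for 41 builds ordered from oldest to newest.
selected = select_interspersed(list(range(41)), maximum=10)
print(selected)
```

Keeping both ends means the oldest and newest candidate builds are always tested, which is what allows the range to be narrowed from either side on the next run.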

Here’s an example of what the tool will output to the console:

    Getting revisions from: https://hg.mozilla.org/mozilla-central/json-pushes?fromchange=07739c5c874f&tochange=318c0d6e24c5
    --------> 45 revisions found
    Getting builds from: https://pvtbuilds.mozilla.org/pvt/mozilla.org/b2gotoro/tinderbox-builds/mozilla-central-hamachi-eng/
    --------> 301 builds found
    --------> 43 builds within range
    --------> 40 builds matching revisions
    Build count exceeds maximum. Selecting interspersed builds.
    --------> 10 builds selected (f98c5c2d6bba:339f0d450d46)
    Application under test: Settings
    Results will be sent to: dhunt@mozilla.com
    Triggering b2g.hamachi.perf for revision: f98c5c2d6bba (1392094613)
    Triggering b2g.hamachi.perf for revision: bd4f1281c3b7 (1392119588)
    Triggering b2g.hamachi.perf for revision: 3b3ac98e0dc1 (1392183487)
    Triggering b2g.hamachi.perf for revision: 7920df861c8a (1392222443)
    Triggering b2g.hamachi.perf for revision: a2939bac372b (1392276621)
    Triggering b2g.hamachi.perf for revision: 6687d299c464 (1392339255)
    Triggering b2g.hamachi.perf for revision: 0beafa155ee9 (1392380212)
    Triggering b2g.hamachi.perf for revision: f6ab28f98ee5 (1392434447)
    Triggering b2g.hamachi.perf for revision: ed8c916743a2 (1392488927)
    Triggering b2g.hamachi.perf for revision: 339f0d450d46 (1392609108)

None of these builds replicated the issue, so I took the last revision, 4f9f58d41eac, and ran again in case there were more suitable builds that had been skipped due to the maximum of 10:

b2ghaystack -b mozilla-central --eng -a Settings -u username -p password -j http://localhost:8080 -e dhunt@mozilla.com hamachi b2g.hamachi.perf 4f9f58d41eac 318c0d6e24c5

This time no builds matched, so I wasn’t going to be able to reduce the regression range using the mozilla-central tinderbox builds. I moved on to the mozilla-inbound builds, and used the original range:

b2ghaystack -b mozilla-inbound --eng -a Settings -u username -p password -j http://localhost:8080 -e dhunt@mozilla.com hamachi b2g.hamachi.perf 07739c5c874f 318c0d6e24c5

Again, no builds matched. This is most likely because we only retain the mozilla-inbound builds for a short time. I moved on to the b2g-inbound builds:

b2ghaystack -b b2g-inbound --eng -a Settings -u username -p password -j http://localhost:8080 -e dhunt@mozilla.com hamachi b2g.hamachi.perf 07739c5c874f 318c0d6e24c5

This found a total of 187 builds within the range 932bf66bc441:9cf71aad6202, and 10 of these ran. The very last one replicated the regression, so I ran again with the new revisions:

b2ghaystack -b b2g-inbound --eng -a Settings -u username -p password -j http://localhost:8080 -e dhunt@mozilla.com hamachi b2g.hamachi.perf e9025167cdb7 9cf71aad6202

This time there were 14 builds, and 10 ran. The penultimate build replicated the regression. Just in case I could narrow it down further, I ran with the new revisions:

b2ghaystack -b b2g-inbound --eng -a Settings -u username -p password -j http://localhost:8080 -e dhunt@mozilla.com hamachi b2g.hamachi.perf b2085eca41a9 e9055e7476f1

No builds matched, so I had my final regression range. The last good build was with revision b2085eca41a9 and the first bad build was with revision e9055e7476f1. This results in a pushlog with just four pushes.
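Each round above comes down to reading the per-build results in order and picking the last good and first bad revisions to feed into the next run. The tool leaves this step to the recipient, but it could be sketched as below. The `narrow_range` helper, the threshold, and the revisions and medians in `results` are all invented purely to show the logic; they are not measurements from this investigation.

```python
def narrow_range(results, threshold):
    """Return (last_good, first_bad) from results ordered oldest first.

    A build counts as bad when its median launch time exceeds `threshold`.
    """
    last_good = None
    for revision, median in results:
        if median > threshold:
            return last_good, revision
        last_good = revision
    # No build exceeded the threshold, so the regression was not reproduced.
    return last_good, None


# Invented placeholder data: (revision, median cold launch time in ms).
results = [("rev-a", 1000), ("rev-b", 1010), ("rev-c", 1200), ("rev-d", 1190)]
last_good, first_bad = narrow_range(results, threshold=1100)
print(last_good, first_bad)
```

A fixed threshold is the simplest possible rule; in practice you would probably judge each result against the run-to-run noise before calling a build good or bad.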

Of these pushes, one stood out as a possible cause for the regression:

    Bug 970895: Use I/O loop for polling memory-pressure events, r=dhylands

    The code for polling sysfs for memory-pressure events currently runs on a separate thread. This patch implements this functionality for the I/O thread. This unifies the code base a bit and also safes some resources.

It turns out this was reverted for causing bug 973824, which was a duplicate of bug 973940. So, regression found!

Here’s an example of the notification e-mail content that our Jenkins instance will send:

    b2g.hamachi.perf - Build # 54 - Successful:
    Check console output at http://selenium.qa.mtv2.mozilla.com:8080/job/b2g.hamachi.perf/54/ to view the results.
    Revision: 2bd34b64468169be0be1611ba1225e3991e451b7
    Timestamp: 24 March 2014 13:36:41
    Location: https://pvtbuilds.mozilla.org/pvt/mozilla.org/b2gotoro/tinderbox-builds/b2g-inbound-hamachi-eng/20140324133641/
    Results: Settings, cold_load_time: median:1438, mean:1444, std: 58, max:1712, min:1394, all:1529,1415,1420,1444,1396,1428,1394,1469,1455,1402,1434,1408,1712,1453,1402,1436,1400,1507,1447,1405,1444,1433,1441,1440,1441,1469,1399,1448,1447,1414
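If you receive many of these e-mails, it may be worth pulling the numbers out of the results text rather than comparing them by eye. The sketch below parses the results from the example above (the part after "Results:") and recomputes the summary from the raw samples. The `parse_results` helper is hypothetical, and it assumes the layout is always `App, metric: key:value, ..., all:v1,v2,...`.

```python
import statistics

# Results text copied from the example notification.
RESULTS = (
    "Settings, cold_load_time: median:1438, mean:1444, std: 58, max:1712, "
    "min:1394, all:1529,1415,1420,1444,1396,1428,1394,1469,1455,1402,"
    "1434,1408,1712,1453,1402,1436,1400,1507,1447,1405,"
    "1444,1433,1441,1440,1441,1469,1399,1448,1447,1414"
)


def parse_results(line):
    """Split a results line into the app name, metric name and raw samples."""
    label, _, stats = line.partition(": ")
    app, _, metric = label.partition(", ")
    # Everything after "all:" is the comma-separated list of samples.
    _, _, samples = stats.partition("all:")
    values = [int(value) for value in samples.split(",")]
    return app, metric, values


app, metric, values = parse_results(RESULTS)
print(app, metric, len(values))
print(statistics.median(values), round(statistics.mean(values)))
```

The recomputed median and rounded mean agree with the reported 1438 and 1444, and the 30 samples match the 30 launches per application.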

Hopefully this tool will help us determine the cause of regressions much sooner than we currently can. I’m sure there are various improvements we could make to this tool – this is very much a first iteration! Please file bugs and CC or needinfo me (:davehunt), or comment below if you have any thoughts or concerns.